Large Language Models and Artificial Intelligence

Binjian Xin | 2023-03-13

Overview

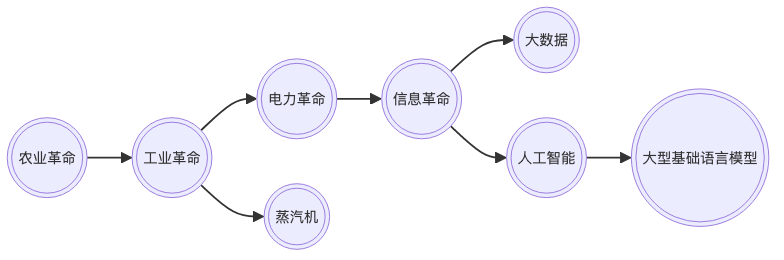

Technological Advancement

- The emergence of new technologies leads to social progress.

- Artificial intelligence is hailed as the electricity of the new era.

- Jordan Tigani: “Big Data is Dead”

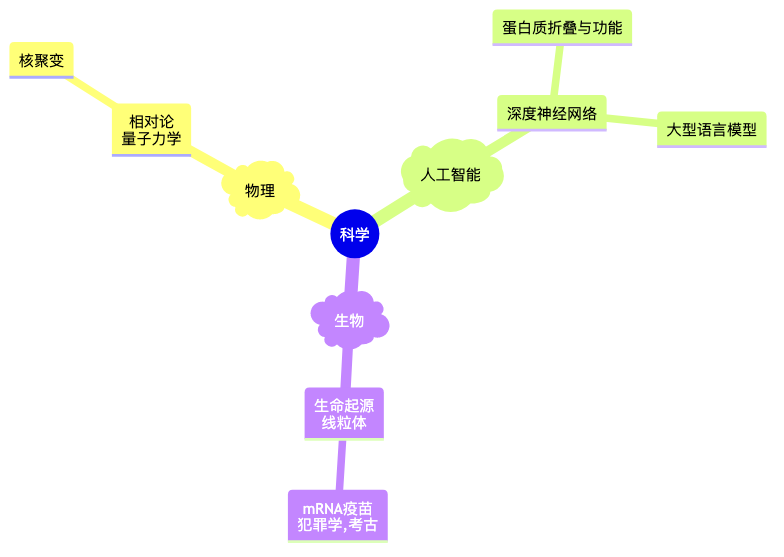

Updating Scientific Concepts

- Three areas have undergone huge, lasting, and profound changes.

- The deeper the understanding of principles, the greater the impact of applications.

What is ChatGPT?

Chat Generative Pretrained Transformer

- Essence: Intelligence transformed into computation

- Basic computational object: the embedding space (embeddings)

- Machine learning methods

- Characteristics

  - Large scale

  - Single method (deep learning, Transformer architecture)

  - Multilingual

  - Strong artificial intelligence (AGI?)

  - Open source and openness

  - Transferable to other domains: images, control

  - Serendipity

Ilya Sutskever, NIPS 2015

- If you have a large enough dataset

- and train a large neural network,

- you will definitely succeed!

Large Language Models

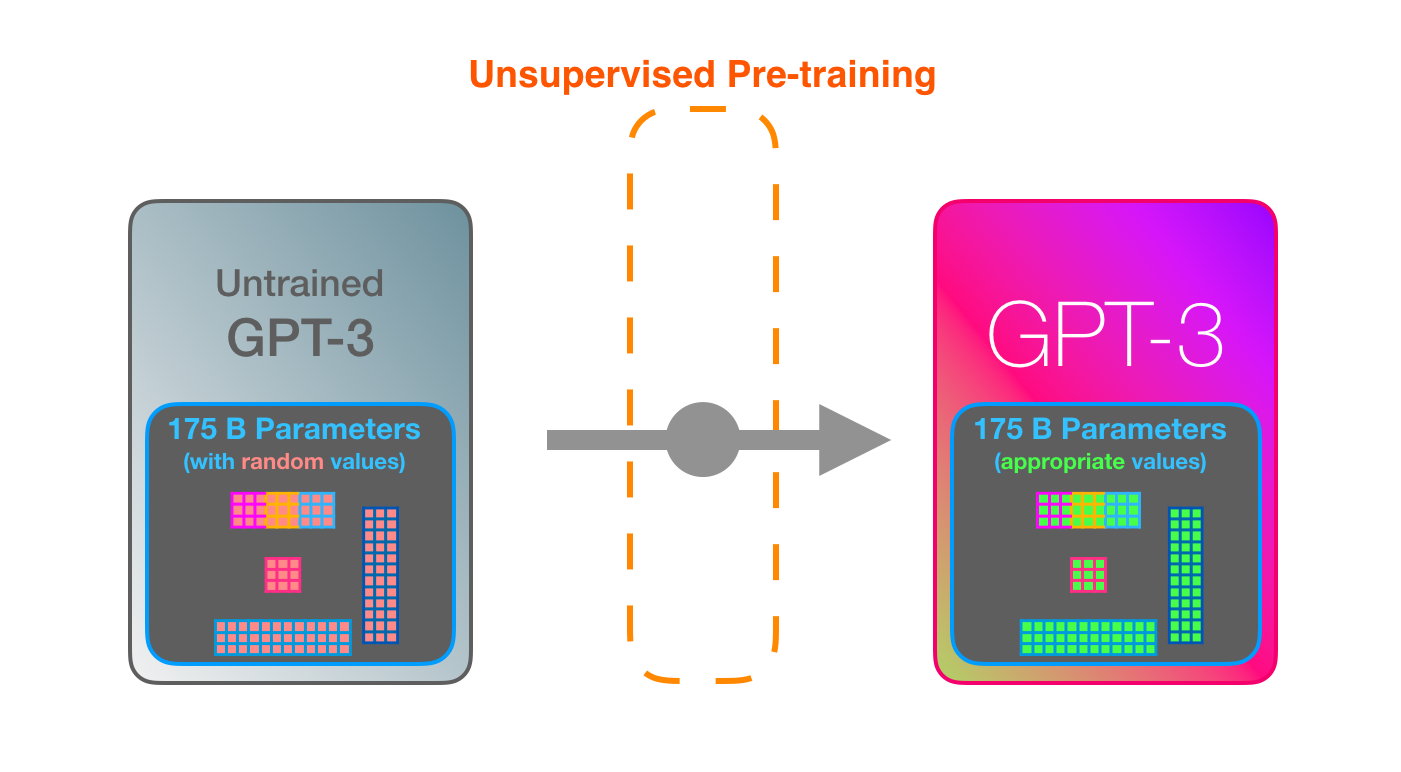

- GPT Series

  - GPT2 (1.5B), GPT3 (175B), InstructGPT (alignment, RLHF), ChatGPT (differences in data collection), GPT4 (?)

👉 NanoGPT (Andrej Karpathy)

- ChatGPT for Slack

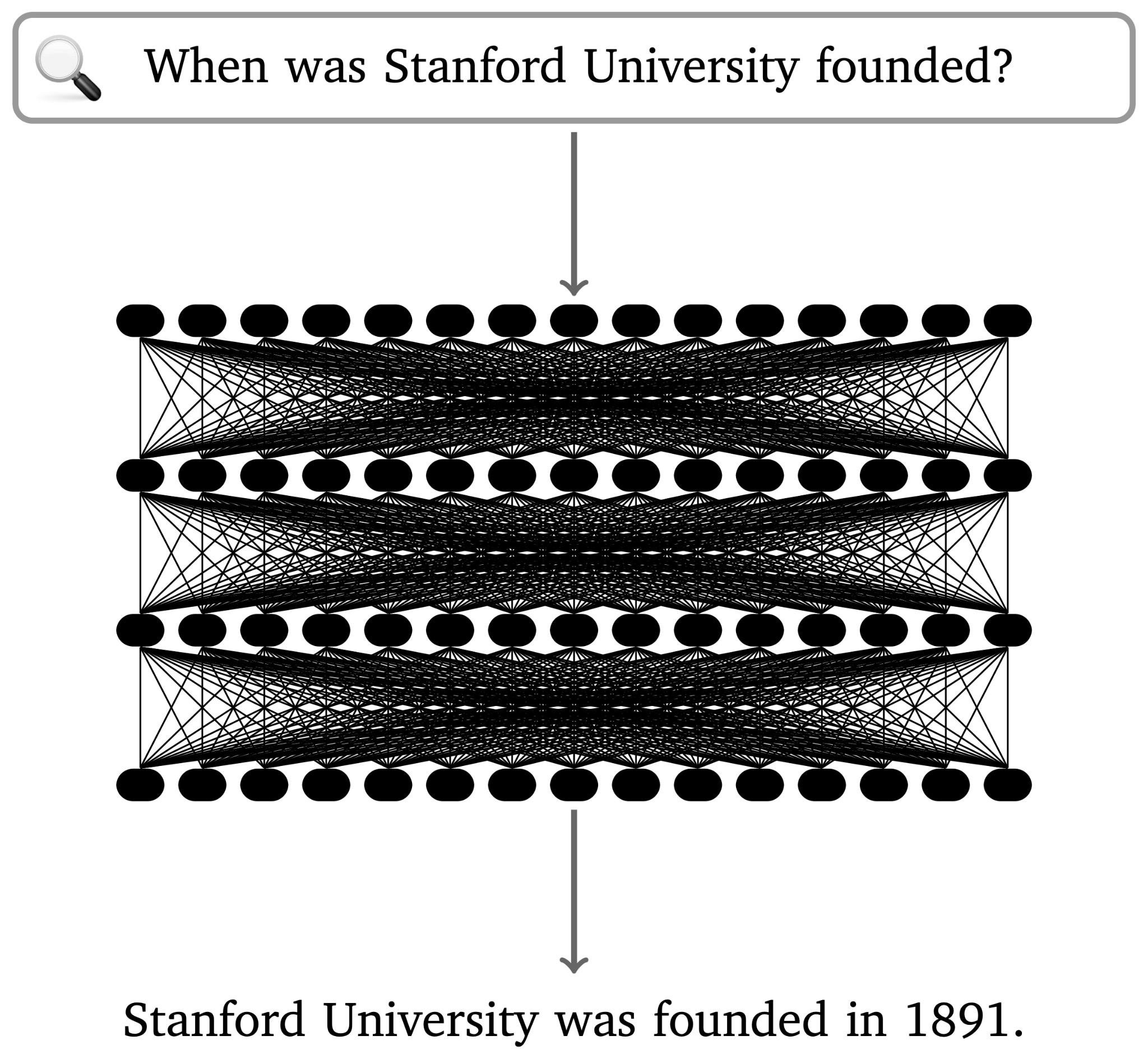
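The parameter counts listed above can be roughly reproduced from a model's shape. This sketch assumes the common approximation of about 12·d_model² weights per Transformer layer (attention projections plus an MLP four times as wide) and ignores biases, layer norms and positional embeddings. The layer counts and widths are the published configurations of the largest GPT2 and GPT3 models; `approx_params` is a hypothetical helper.

```python
def approx_params(n_layer: int, d_model: int, vocab_size: int) -> int:
    """Rough parameter count of a GPT-style decoder-only Transformer.

    Assumption: each layer holds ~12 * d_model**2 weights
    (4 * d^2 for the Q, K, V and output projections, 8 * d^2 for an MLP
    with hidden width 4 * d). Biases, layer norms and positional
    embeddings are ignored; the token embedding is counted once.
    """
    per_layer = 12 * d_model ** 2
    token_embedding = vocab_size * d_model
    return n_layer * per_layer + token_embedding


# Published shapes of the largest GPT2 and GPT3 models (BPE vocabulary of 50,257).
gpt2_xl = approx_params(n_layer=48, d_model=1600, vocab_size=50257)
gpt3 = approx_params(n_layer=96, d_model=12288, vocab_size=50257)

print(f"GPT2 ~ {gpt2_xl / 1e9:.2f}B parameters")  # GPT2 ~ 1.55B parameters
print(f"GPT3 ~ {gpt3 / 1e9:.1f}B parameters")     # GPT3 ~ 174.6B parameters
```

Both estimates land within a few percent of the quoted 1.5B and 175B, which is why the 12·d² rule is a handy sanity check.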

Neural Network as a Large Language Model

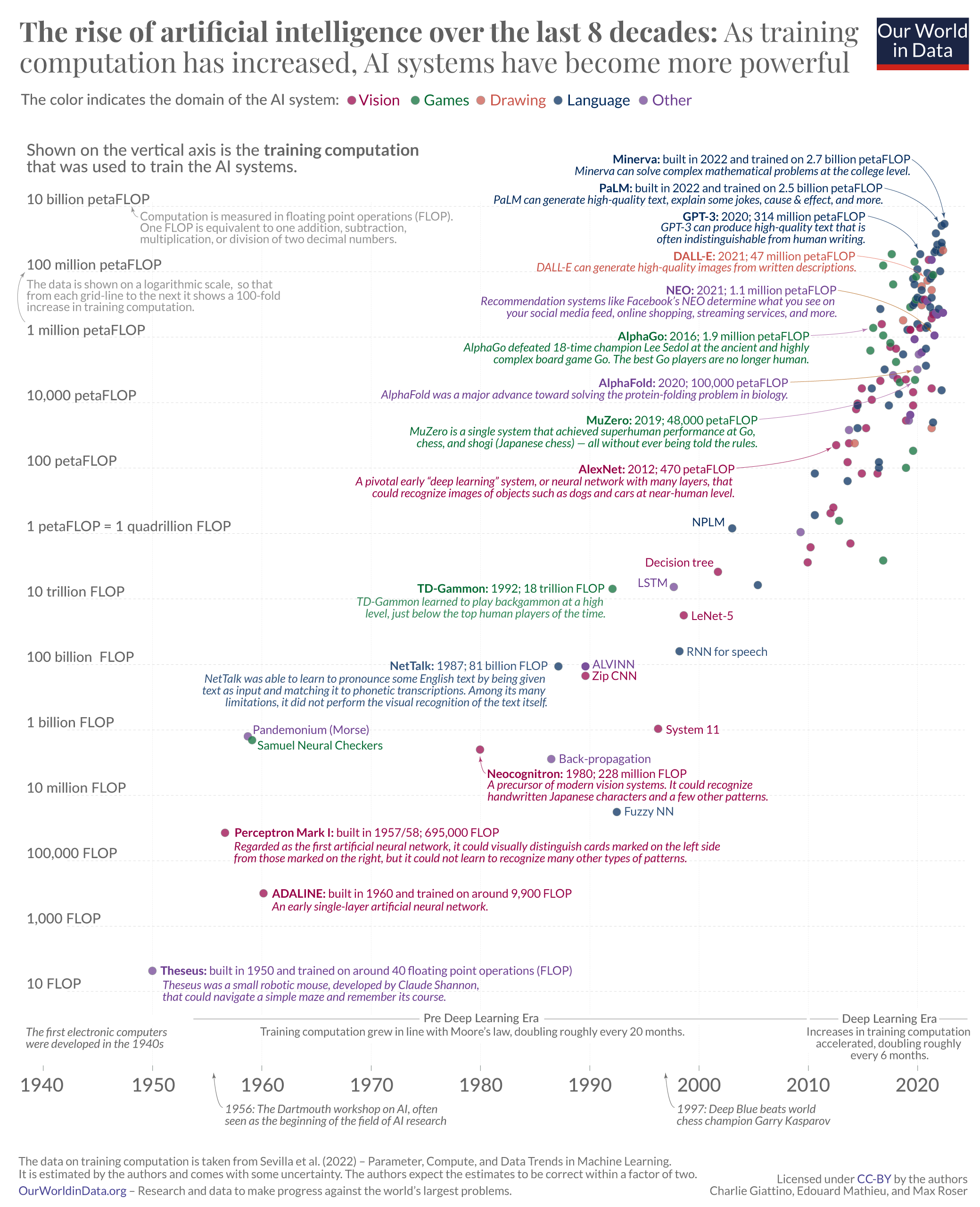

Large Language Models and Training Compute

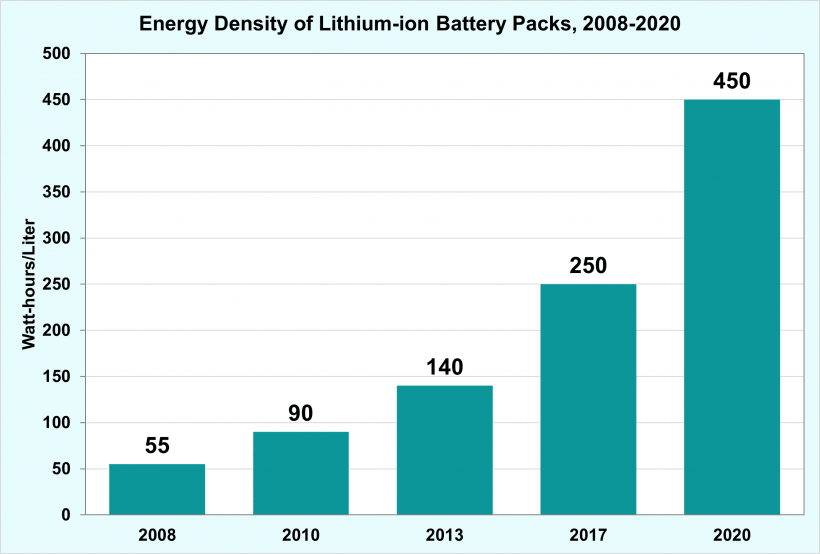
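A widely used back-of-the-envelope estimate for training compute is C ≈ 6·N·D floating-point operations, where N is the parameter count and D the number of training tokens (roughly 2 FLOPs per parameter per token for the forward pass and 4 for the backward pass). The sketch applies it to GPT3, assuming the roughly 300B training tokens reported for that model; `training_flops` is a hypothetical helper.

```python
def training_flops(n_params: float, n_tokens: float) -> float:
    """Approximate training cost of a dense Transformer: ~6 FLOPs per parameter per token."""
    return 6.0 * n_params * n_tokens


# GPT3: 175B parameters, ~300B training tokens.
flops = training_flops(175e9, 300e9)
pf_days = flops / (1e15 * 86400)  # 1 petaflop/s-day = 1e15 FLOP/s sustained for 24 hours

print(f"{flops:.2e} FLOPs ~ {pf_days:,.0f} petaflop/s-days")
# 3.15e+23 FLOPs ~ 3,646 petaflop/s-days
```

Because cost scales with the product N·D, growing the model and the dataset together multiplies compute, which is the steep curve the chart shows.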

Energy Density Improvement of Lithium Batteries

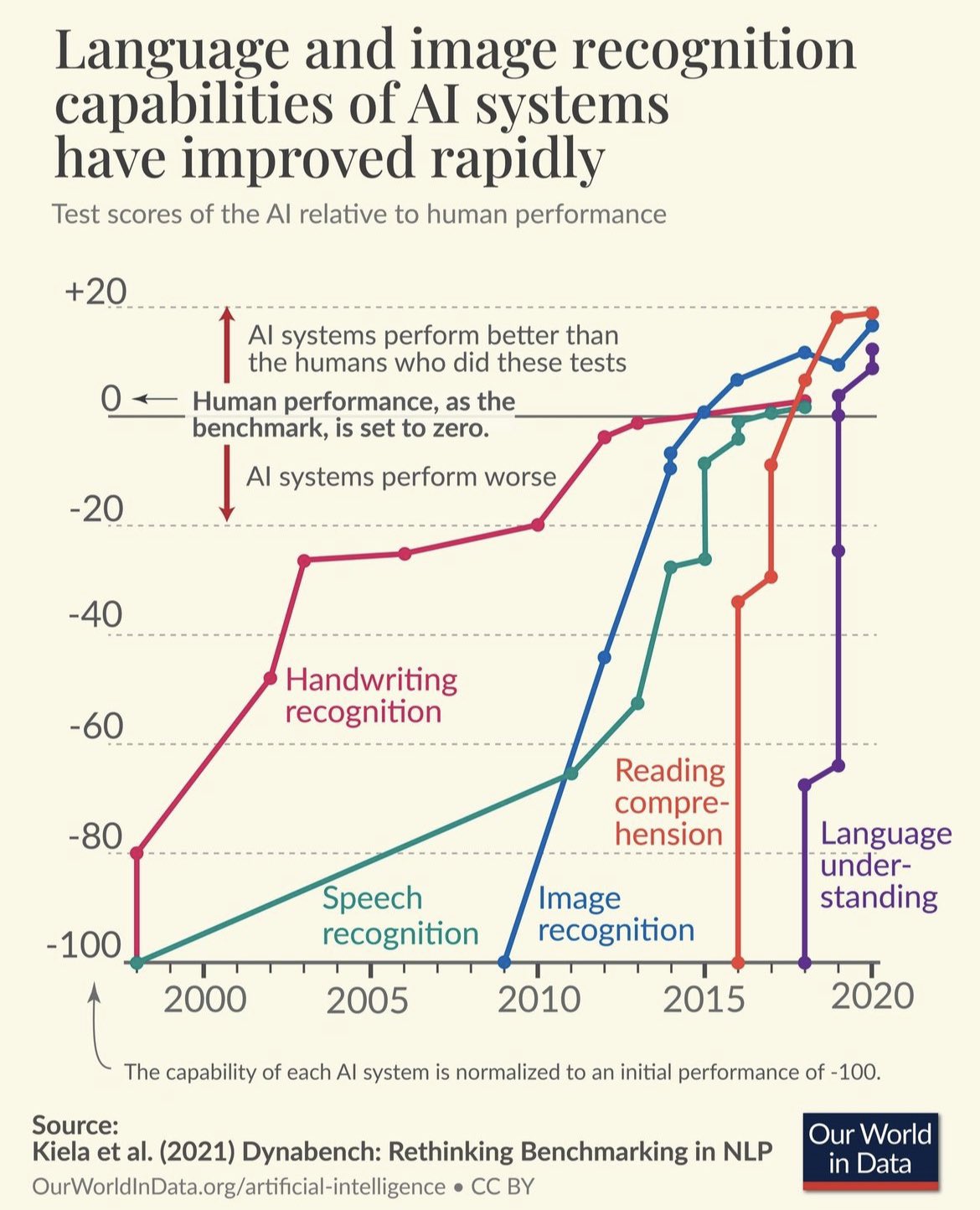

Improvement in Large Language Model Capabilities

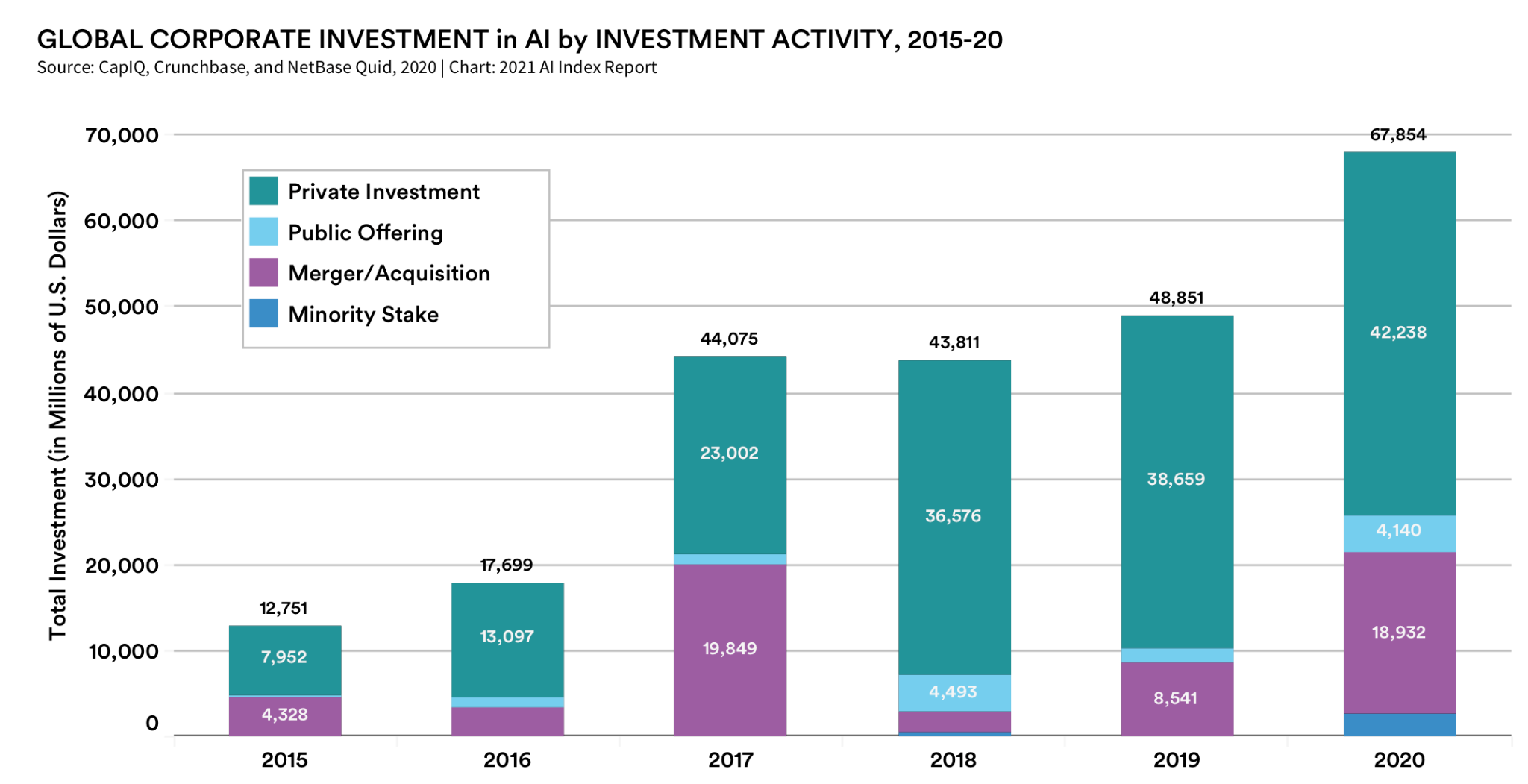

Social Impact

- Microsoft invests in OpenAI

- Competition: Microsoft (Sydney), Google (LaMDA, Bard), Meta (Galactica, LLaMA), GPT4

- Intelligence, Agency, Sentience, Consciousness, Will

ChatGPT’s False Promises

It can be used to solve problems, but its concept of language and knowledge is fundamentally flawed.

Yoshua Bengio

ChatGPT is impressive, but scientifically it is just a small step; at best, it is an engineering advance.

Engineering Implementation of Large Language Models

Use Cases

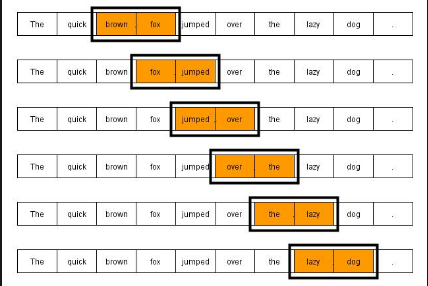

Language Encoding Models: Morphemes and n-grams

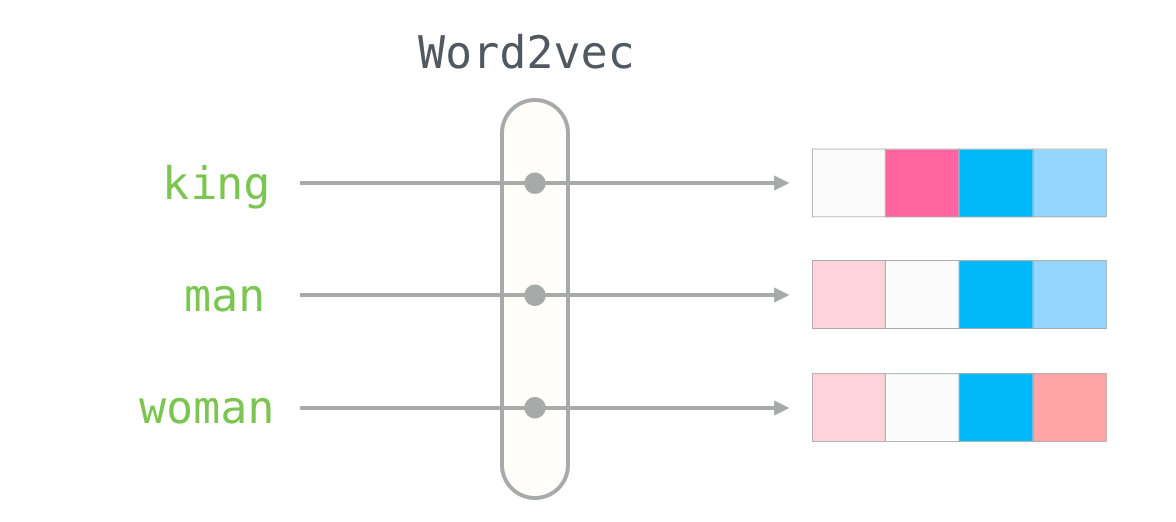

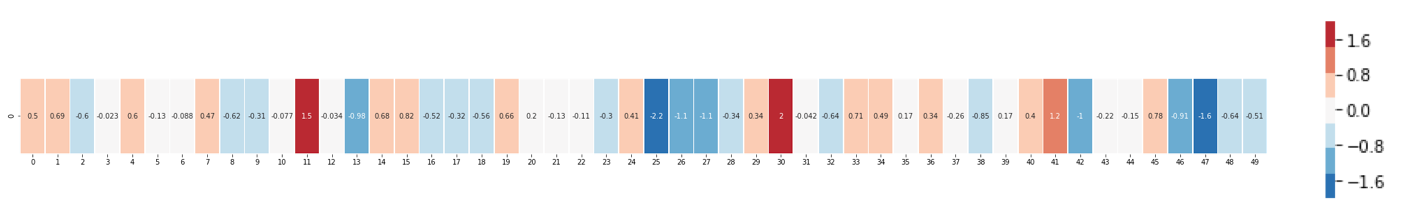

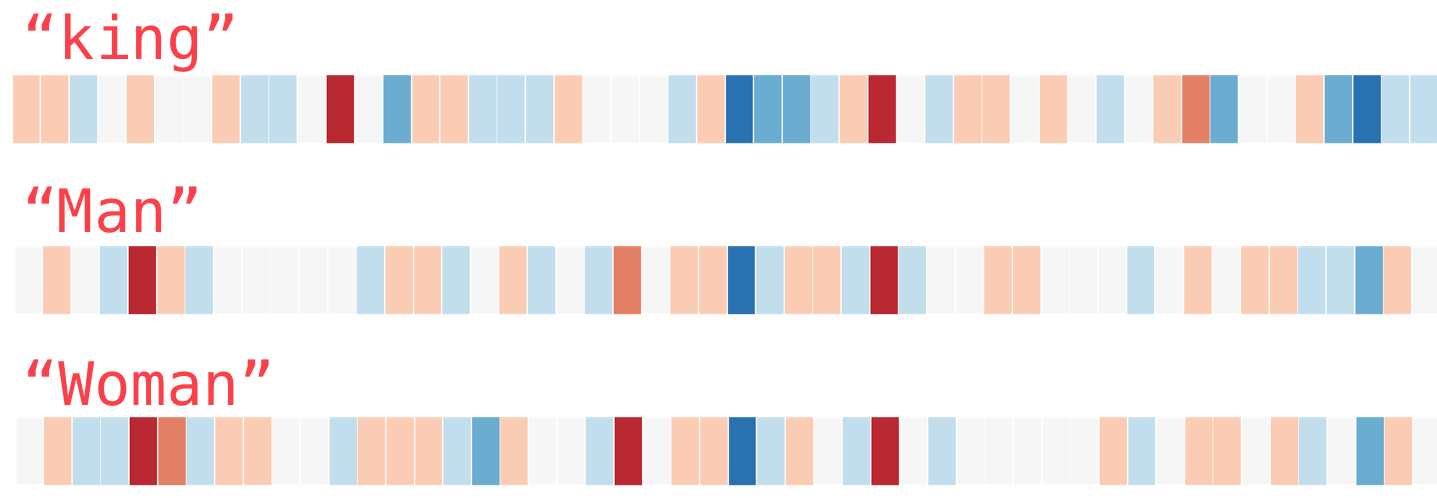
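An n-gram model predicts the next token from the previous n - 1 tokens purely by counting. The minimal bigram (n = 2) sketch below assumes whitespace tokenization and a made-up toy corpus; GPT itself uses learned subword tokens and a neural network rather than count tables.

```python
from collections import Counter, defaultdict


def train_bigram(tokens):
    """Count how often each token follows each preceding token."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def next_token_probs(counts, prev):
    """Maximum-likelihood estimate of P(next | prev); empty dict for unseen tokens."""
    followers = counts.get(prev, Counter())
    total = sum(followers.values())
    return {tok: c / total for tok, c in followers.items()} if total else {}


corpus = "the cat sat on the mat . the cat ran .".split()
model = train_bigram(corpus)
print(next_token_probs(model, "the"))  # cat: 2/3, mat: 1/3
```

Raising n captures longer context, but the count table grows quickly and most long n-grams never appear in the training data.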

Computational Objects in GPT: Embeddings

- Embeddings (encodings of words/morphemes)

  - Independent semantics: reusable like variables, appearing repeatedly at different positions in sentences and texts

  - Correspond to qualia: concepts (e.g. colors) form clusters in consciousness; language is just an interface

  - Their mutual relationships are determined by computation

  - Learned from training samples, capturing semantics that is determined by syntax

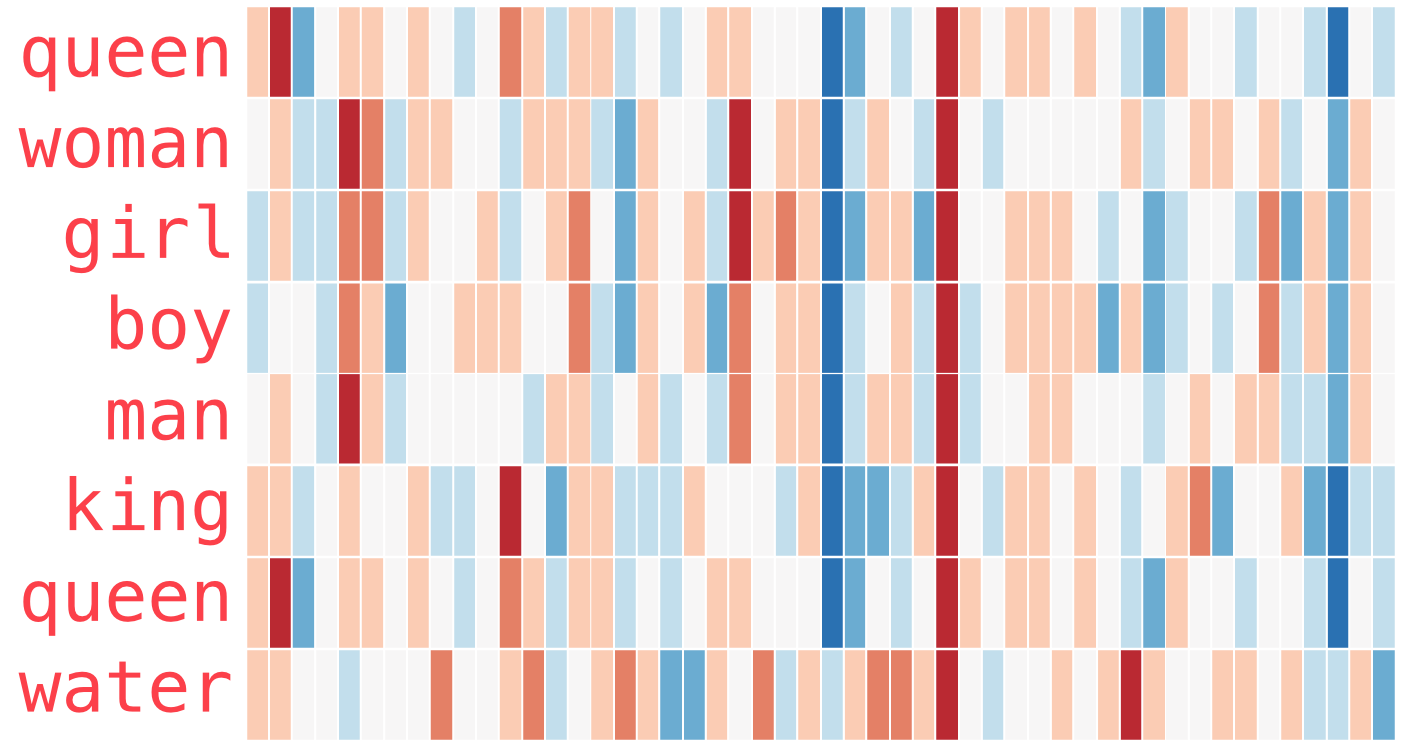

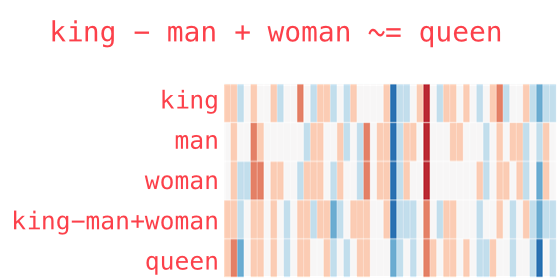
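In practice an embedding is a row of a learned table indexed by the token id, and relationships between embeddings are computed, most commonly with cosine similarity. The vocabulary and 3-dimensional vectors below are hypothetical, hand-picked values chosen only to illustrate the lookup and the comparison.

```python
import math

# Hypothetical toy vocabulary and embedding table (one row per token).
# Real models learn tables with tens of thousands of rows and thousands of columns.
vocab = {"cat": 0, "dog": 1, "car": 2}
embedding_table = [
    [0.9, 0.8, 0.1],  # cat
    [0.8, 0.9, 0.2],  # dog
    [0.1, 0.2, 0.9],  # car
]


def embed(token):
    """Embedding lookup: the token id selects a row of the table."""
    return embedding_table[vocab[token]]


def cosine(u, v):
    """Cosine similarity: 1 means same direction, 0 means orthogonal."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


print(round(cosine(embed("cat"), embed("dog")), 3))  # high: related concepts
print(round(cosine(embed("cat"), embed("car")), 3))  # low: unrelated concepts
```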

Operations on Embeddings
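A well-known operation on word embeddings is analogy by vector arithmetic: the vector king - man + woman tends to lie closest to queen. The sketch uses hypothetical, hand-made vectors with interpretable axes so the result is easy to check; learned embeddings only approximate this behavior.

```python
import math

# Hypothetical embeddings with three interpretable axes:
# (royalty, gender: +male / -female, fruit).
emb = {
    "man":   [0.1,  0.9, 0.0],
    "woman": [0.1, -0.9, 0.0],
    "king":  [0.9,  0.9, 0.0],
    "queen": [0.9, -0.9, 0.0],
    "apple": [0.0,  0.0, 1.0],
}


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def analogy(a, b, c):
    """Solve 'a is to b as c is to ?' by searching near the vector b - a + c."""
    target = [y - x + z for x, y, z in zip(emb[a], emb[b], emb[c])]
    candidates = [w for w in emb if w not in (a, b, c)]  # exclude the query words
    return max(candidates, key=lambda w: cosine(emb[w], target))


print(analogy("man", "king", "woman"))  # queen
```

Excluding the query words from the candidates is standard practice, since the input vectors themselves are often the nearest neighbors of the target.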

Clustering of Embeddings

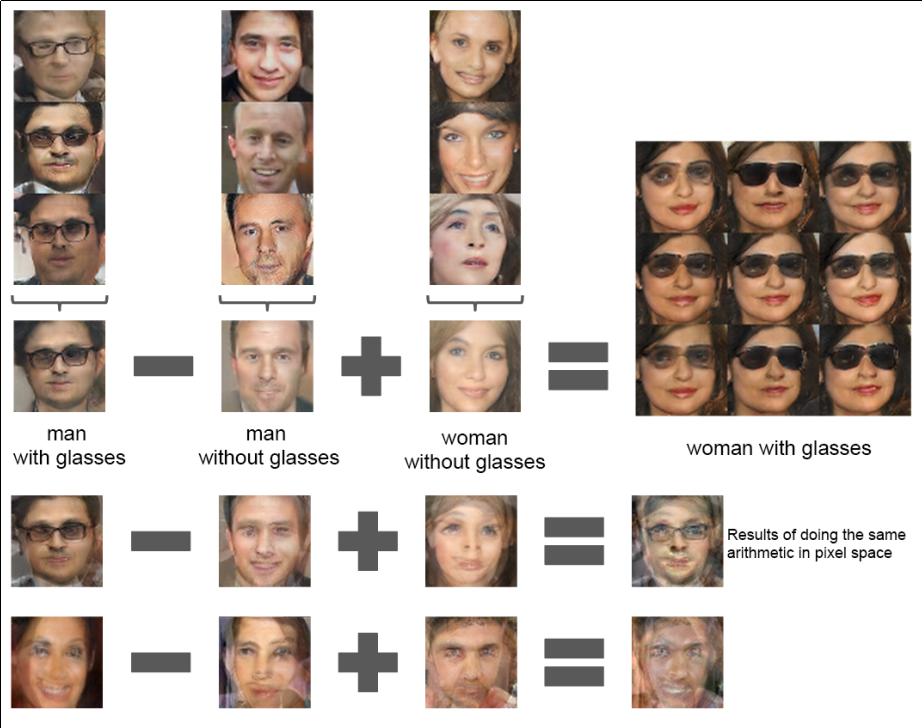
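Clusters like the one in the figure can be found with an ordinary clustering algorithm. The slide does not say which method was used; the sketch assumes plain k-means with a deterministic farthest-point initialization, applied to hypothetical 2-D embeddings of color words.

```python
def dist2(u, v):
    """Squared Euclidean distance."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


def mean(vectors):
    return [sum(dim) / len(vectors) for dim in zip(*vectors)]


def init_farthest(points, k):
    """Start from the first point, then repeatedly add the point farthest from all chosen centroids."""
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(max(points, key=lambda p: min(dist2(p, c) for c in centroids)))
    return centroids


def nearest(p, centroids):
    return min(range(len(centroids)), key=lambda i: dist2(p, centroids[i]))


def kmeans(points, k, iters=20):
    centroids = init_farthest(points, k)
    for _ in range(iters):
        # Assignment step: each point joins its nearest centroid.
        labels = [nearest(p, centroids) for p in points]
        # Update step: move each centroid to the mean of its members (keep it if empty).
        new_centroids = []
        for i in range(k):
            members = [p for p, lab in zip(points, labels) if lab == i]
            new_centroids.append(mean(members) if members else centroids[i])
        centroids = new_centroids
    return [nearest(p, centroids) for p in points], centroids


words = ["red", "crimson", "scarlet", "blue", "navy", "azure"]
vectors = [[1.0, 0.1], [0.9, 0.0], [0.95, 0.2], [0.1, 1.0], [0.0, 0.9], [0.2, 0.95]]
labels, _ = kmeans(vectors, k=2)
for c in range(2):
    print(c, [w for w, lab in zip(words, labels) if lab == c])
```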

Embedding in Images

- Image embeddings: encoding and decoding obtained through DCGAN training

- Interpolating embedding parameters: images change continuously (male → female)

- Vector arithmetic on embeddings: targeted modification of images

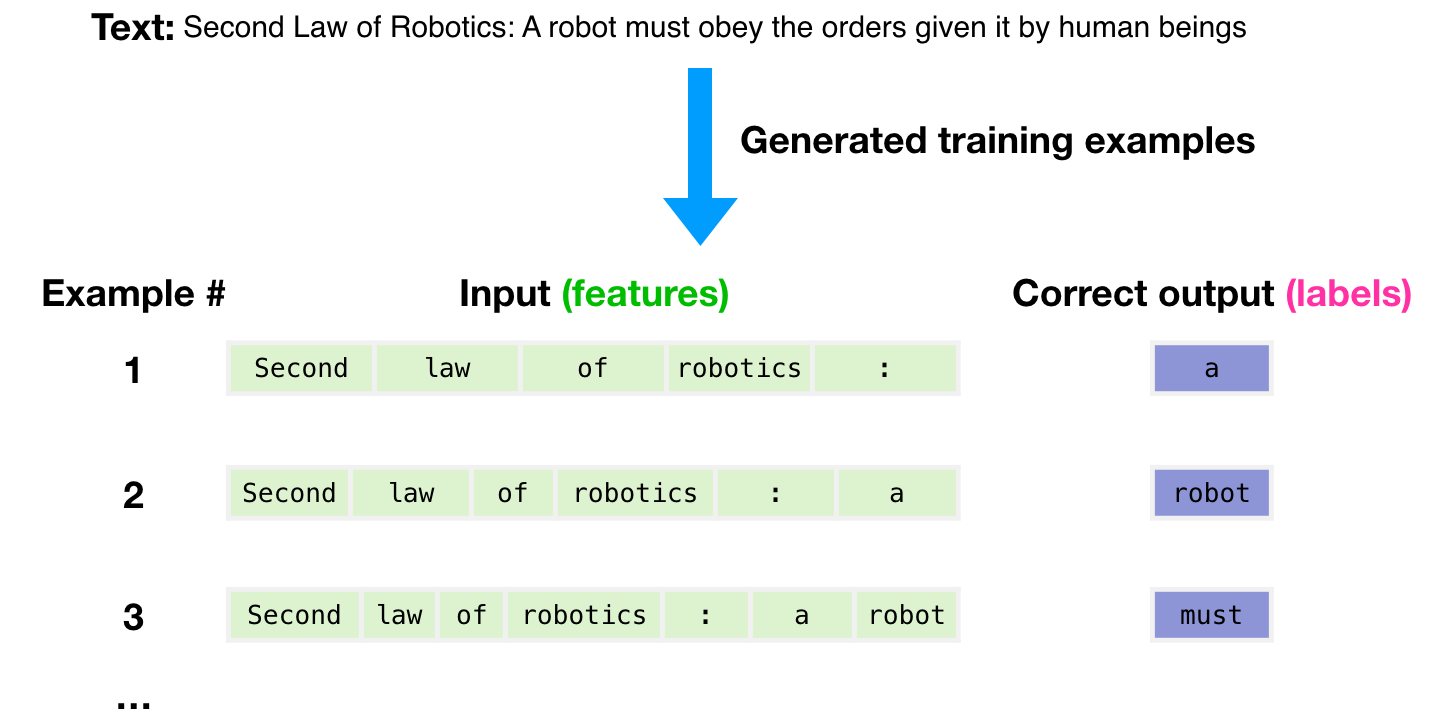
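Both image operations listed above are plain vector math on the latent (embedding) vectors fed to the generator. The sketch shows linear interpolation and attribute arithmetic using the difference of averaged latent vectors; the generator network that turns each vector into an image is assumed and not shown, and the helper names are hypothetical.

```python
def lerp(z0, z1, t):
    """Linear interpolation between two latent vectors: t=0 gives z0, t=1 gives z1."""
    return [(1 - t) * a + t * b for a, b in zip(z0, z1)]


def interpolation_path(z0, z1, steps):
    """Evenly spaced latent vectors from z0 to z1 (inclusive); each would be decoded to one frame."""
    if steps < 2:
        raise ValueError("need at least 2 steps")
    return [lerp(z0, z1, i / (steps - 1)) for i in range(steps)]


def mean(vectors):
    return [sum(dim) / len(vectors) for dim in zip(*vectors)]


def add_attribute(z, with_attr, without_attr):
    """Shift z along an attribute direction estimated as
    mean(latents with the attribute) - mean(latents without it)."""
    direction = [a - b for a, b in zip(mean(with_attr), mean(without_attr))]
    return [x + d for x, d in zip(z, direction)]


path = interpolation_path([0.0, 0.0], [1.0, 2.0], steps=5)
print(path[2])  # [0.5, 1.0]
```

Averaging several latent vectors per attribute makes the direction less sensitive to any single sample. For Gaussian latent spaces, spherical interpolation is often preferred; linear interpolation keeps the sketch simple.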

GPT3 Training

GPT3 Sample Input

GPT3 Inference
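At inference time GPT3 produces text one token at a time: the model scores every vocabulary entry, one token is picked and appended, and the longer sequence is fed back in. The sketch shows that loop with greedy and temperature sampling (common decoding options, not details from the slide); `toy_next_logits` is a hypothetical stand-in for the real network.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def generate(next_logits, prompt, max_new_tokens, temperature=1.0, greedy=False, seed=0):
    """Autoregressive decoding: append one token at a time, feeding the growing
    sequence back into the model at every step."""
    rng = random.Random(seed)
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        probs = softmax(next_logits(tokens), temperature)
        if greedy:
            nxt = max(range(len(probs)), key=probs.__getitem__)
        else:
            nxt = rng.choices(range(len(probs)), weights=probs, k=1)[0]
        tokens.append(nxt)
    return tokens


VOCAB_SIZE = 5


def toy_next_logits(tokens):
    """Hypothetical model: strongly favors (last token + 1) mod VOCAB_SIZE."""
    favored = (tokens[-1] + 1) % VOCAB_SIZE
    return [5.0 if i == favored else 0.0 for i in range(VOCAB_SIZE)]


print(generate(toy_next_logits, [0], max_new_tokens=6, greedy=True))  # [0, 1, 2, 3, 4, 0, 1]
print(generate(toy_next_logits, [0], max_new_tokens=6, temperature=2.0))  # sampled, may deviate
```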

GPT3 Context and Embedding

GPT3 and Transformer

GPT3 Applications

ChatGPT

- Base model: GPT3.5 (Codex)

- Supervised fine-tuning on human-written demonstrations

- A reward model is trained on human rankings of model outputs

- The model is further optimized against the reward model with reinforcement learning (PPO)

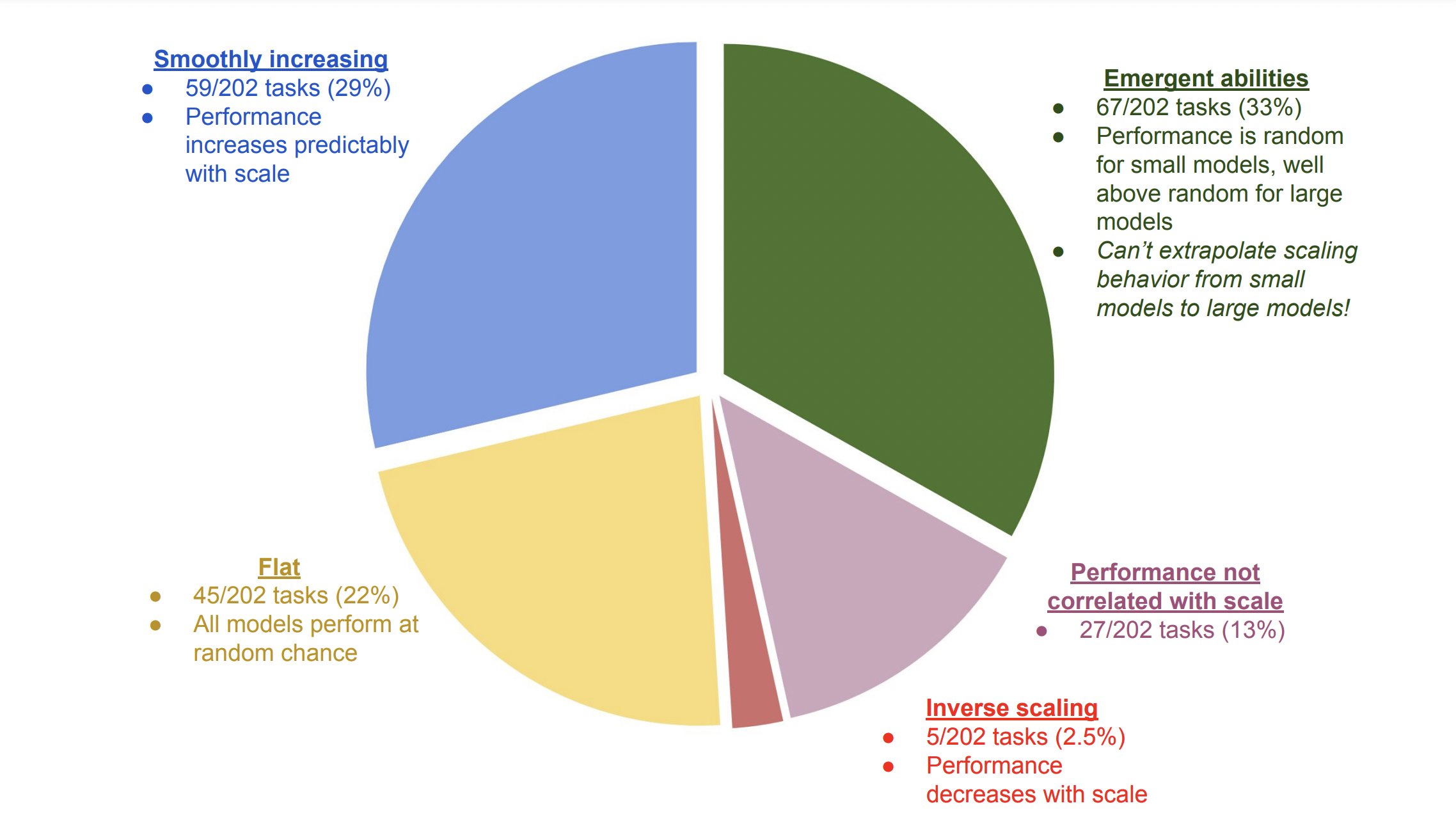
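The two training signals in the pipeline above can be written down compactly. As a sketch following the InstructGPT recipe as commonly described, the reward model is fit with a pairwise loss, -log σ(r_chosen - r_rejected), on human comparisons, and the policy is then updated with PPO's clipped surrogate objective. Scalar inputs stand in for the outputs of real networks, and the KL penalty and value function used in practice are omitted.

```python
import math


def reward_model_loss(r_chosen: float, r_rejected: float) -> float:
    """Pairwise ranking loss -log(sigmoid(r_chosen - r_rejected)).

    Small when the reward model scores the human-preferred answer higher.
    Computed as softplus(-x) in a numerically stable form.
    """
    x = r_chosen - r_rejected
    if x >= 0:
        return math.log1p(math.exp(-x))
    return -x + math.log1p(math.exp(x))


def ppo_clipped_objective(ratio: float, advantage: float, eps: float = 0.2) -> float:
    """Per-sample PPO surrogate (to be maximized).

    ratio = pi_new(a|s) / pi_old(a|s); clipping the ratio to [1 - eps, 1 + eps]
    stops a single update from moving the policy too far.
    """
    clipped_ratio = max(1.0 - eps, min(ratio, 1.0 + eps))
    return min(ratio * advantage, clipped_ratio * advantage)


print(reward_model_loss(2.0, 0.0) < reward_model_loss(0.0, 2.0))  # True
print(ppo_clipped_objective(1.5, 1.0))  # 1.2: the gain is capped by clipping
```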

Emergent Behavior

Application and Deployment

- Prompt Engineering

- LLaMA reproduces GPT (Stanford Alpaca 7B, trained for about $100)

- Training on data generated through the API ➡ Business model?

- LLaMA (7B) ported to a Raspberry Pi (4 GB, 10 s/token)

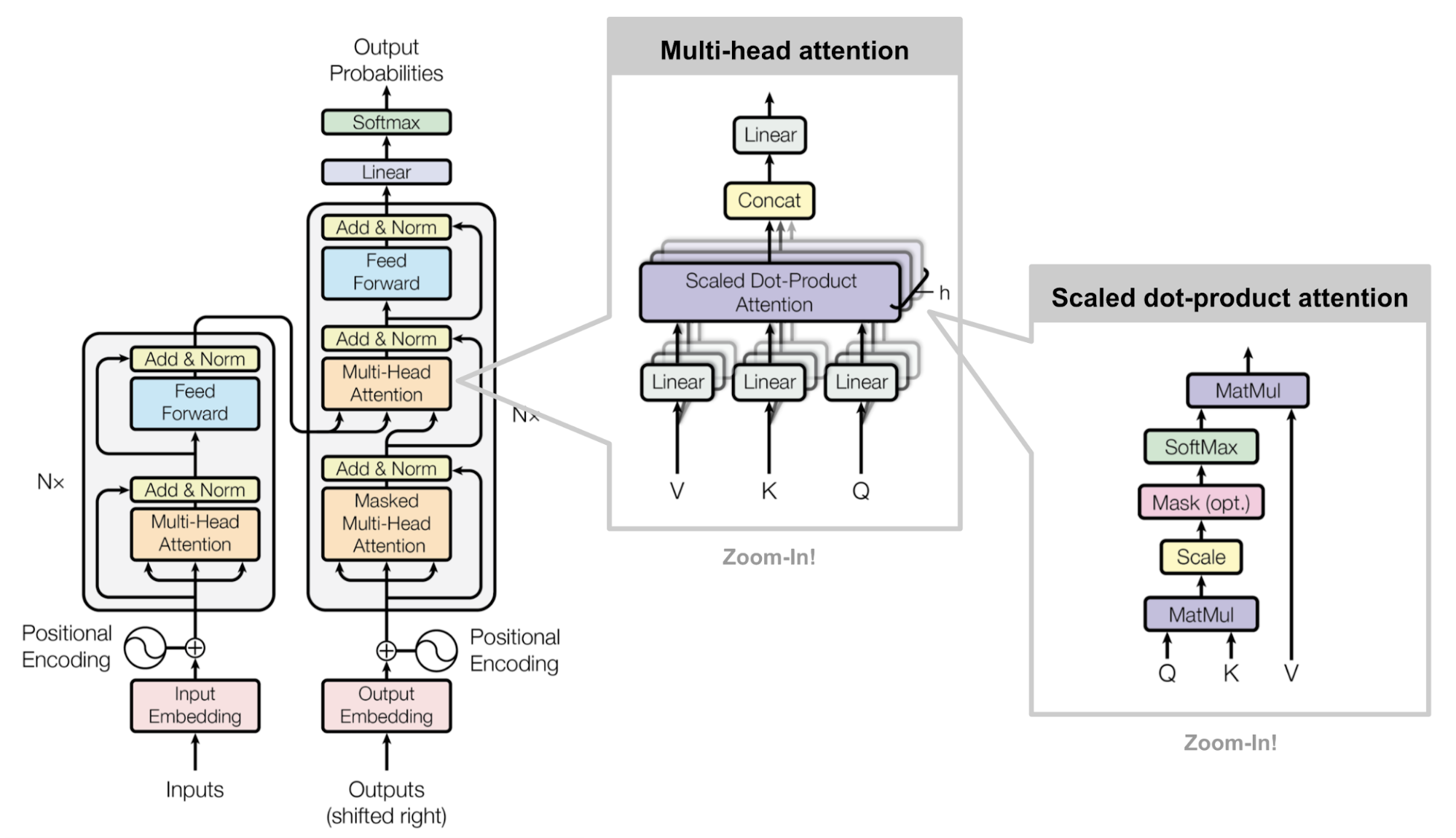
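The 4 GB figure follows from simple arithmetic on the weights. Assuming the port stores LLaMA's roughly 7 billion weights at about 4 bits each (the slide does not state the precision), the weights alone need a little over 3 GiB, while 16-bit weights would not fit. Activations, the attention cache and quantization scales are ignored; `weight_memory_gib` is a hypothetical helper.

```python
def weight_memory_gib(n_params: float, bits_per_weight: float) -> float:
    """GiB needed just to store the weights (activations, attention cache and
    per-block quantization scales are ignored)."""
    return n_params * bits_per_weight / 8 / 2**30


for bits in (16, 8, 4):
    print(f"7B weights at {bits:2d}-bit: {weight_memory_gib(7e9, bits):5.2f} GiB")
# 16-bit: 13.04 GiB, 8-bit: 6.52 GiB, 4-bit: 3.26 GiB
```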

Transformer

- Structure: less inductive bias, better generalization

- Attention: self-attention, cross-attention, multi-head self-attention

- MLP (multilayer perceptron)

- Residual connections

- Requires a large number of training samples

- Network scale must grow together with dataset size
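The components listed above (attention, MLP, residual connections) fit together roughly as in the sketch below. It is a simplification, not GPT's exact layer: a single attention head with a causal mask, no layer normalization or output projection, ReLU instead of GELU, and plain Python lists instead of a tensor library. The weight matrices are supplied by the caller.

```python
import math


def matmul(A, B):
    """Plain matrix product of two lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def add(A, B):
    """Element-wise sum, used for the residual connections."""
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def causal_self_attention(X, Wq, Wk, Wv):
    """Single-head scaled dot-product self-attention with a causal mask:
    position t attends only to positions 0..t."""
    Q, K, V = matmul(X, Wq), matmul(X, Wk), matmul(X, Wv)
    scale = math.sqrt(len(K[0]))
    out = []
    for t, q in enumerate(Q):
        scores = [sum(a * b for a, b in zip(q, K[s])) / scale for s in range(t + 1)]
        weights = softmax(scores)
        out.append([sum(w * V[s][j] for s, w in enumerate(weights)) for j in range(len(V[0]))])
    return out


def mlp(X, W1, W2):
    """Position-wise two-layer perceptron (ReLU here; GPT models use GELU)."""
    hidden = [[max(0.0, h) for h in row] for row in matmul(X, W1)]
    return matmul(hidden, W2)


def transformer_block(X, Wq, Wk, Wv, W1, W2):
    """Residual structure: x + attention(x), then x + mlp(x); layer norm omitted.
    Wv and W2 must map back to d_model columns so the residual sums line up."""
    X = add(X, causal_self_attention(X, Wq, Wk, Wv))
    return add(X, mlp(X, W1, W2))


I2 = [[1.0, 0.0], [0.0, 1.0]]             # identity weights, d_model = 2
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # 3 token positions
print(transformer_block(X, I2, I2, I2, I2, I2))
```

The causal mask is what lets the same block serve autoregressive generation: changing a later token never alters the outputs at earlier positions.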

Disputes

ChatGPT is a Blurry JPEG of Reality

- Similar to a JPEG, which is a lossy compression of the original image

- Inaccurate descriptions of reality lead to distortion and misinformation

- Model hallucinations contaminate the text corpus and the wider information space

GPT4 and the Uncharted Territories of Language

“They (LLM) could also create new ethical, social, and cultural challenges that require careful reflection and regulation. How we use this technology will depend on how we recognize its implications for ourselves and others.

But when we let GPT4 do this for us, are we not abdicating our intelligence? Are we not letting go of our ability to choose, to pick out, to read? Are we not becoming passive consumers of language instead of active producers?”

GPT4 Response prompted by Jeremy Howard on 2023.02.23

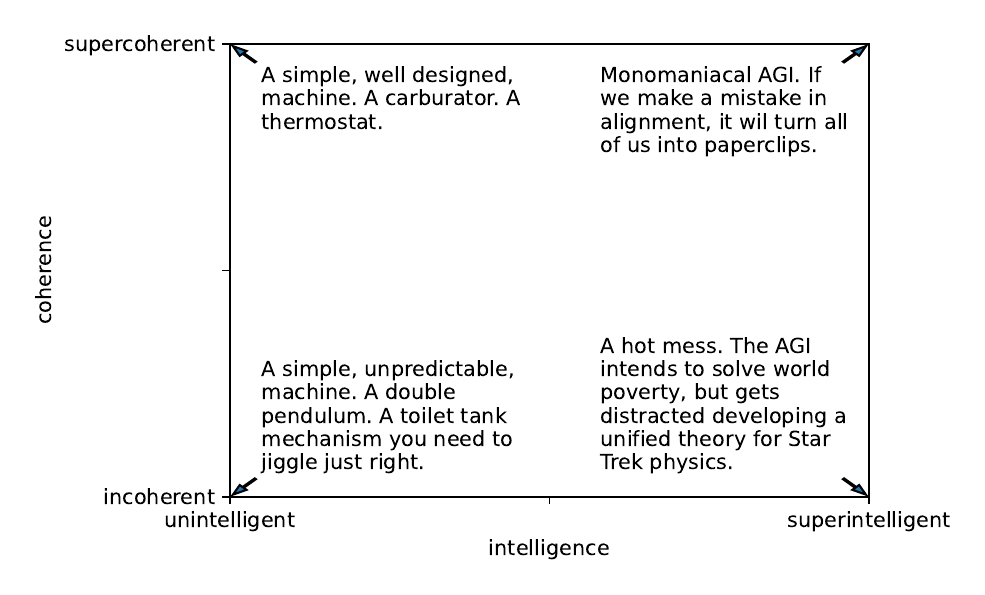

Intelligence and Coherence Issues

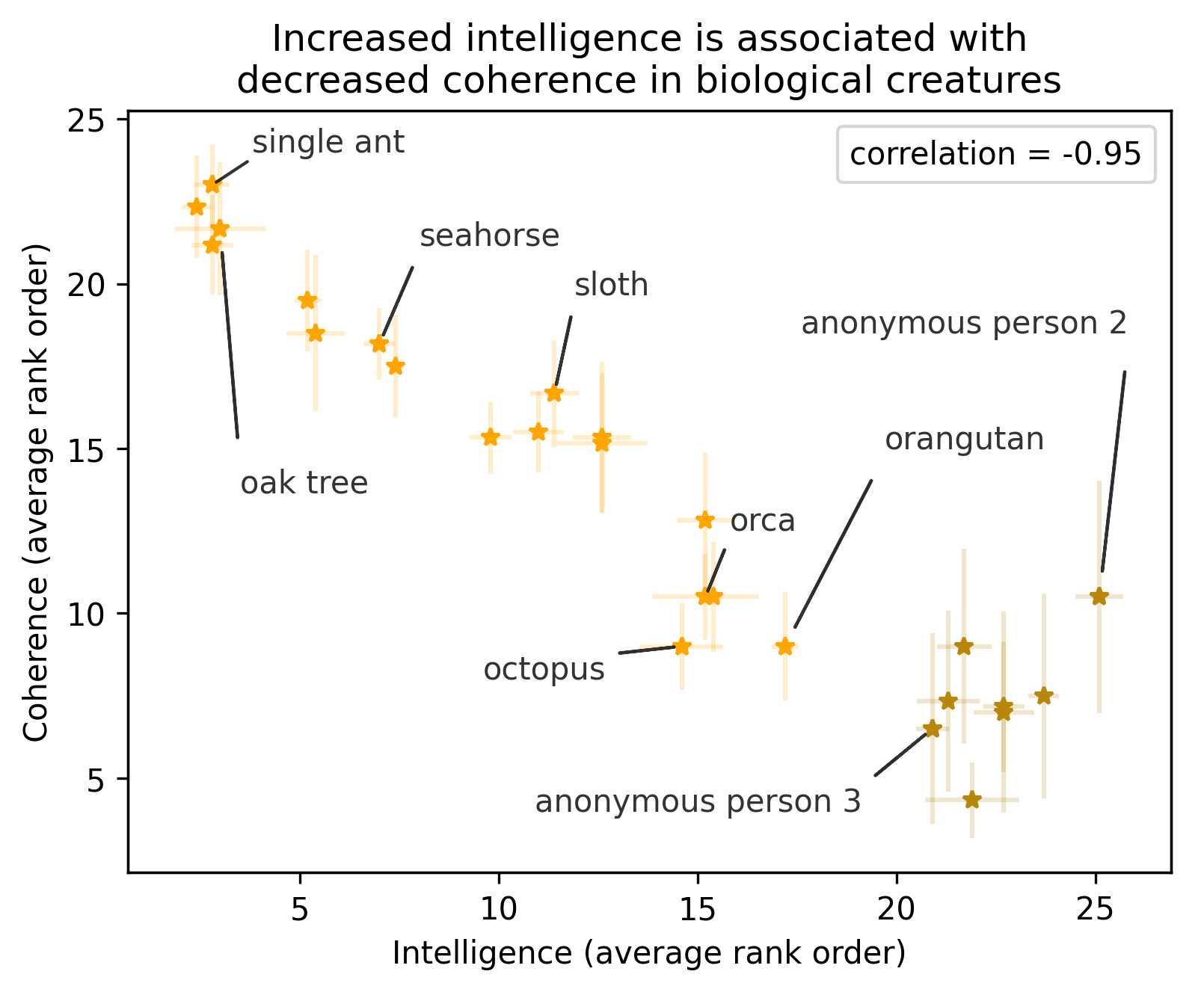

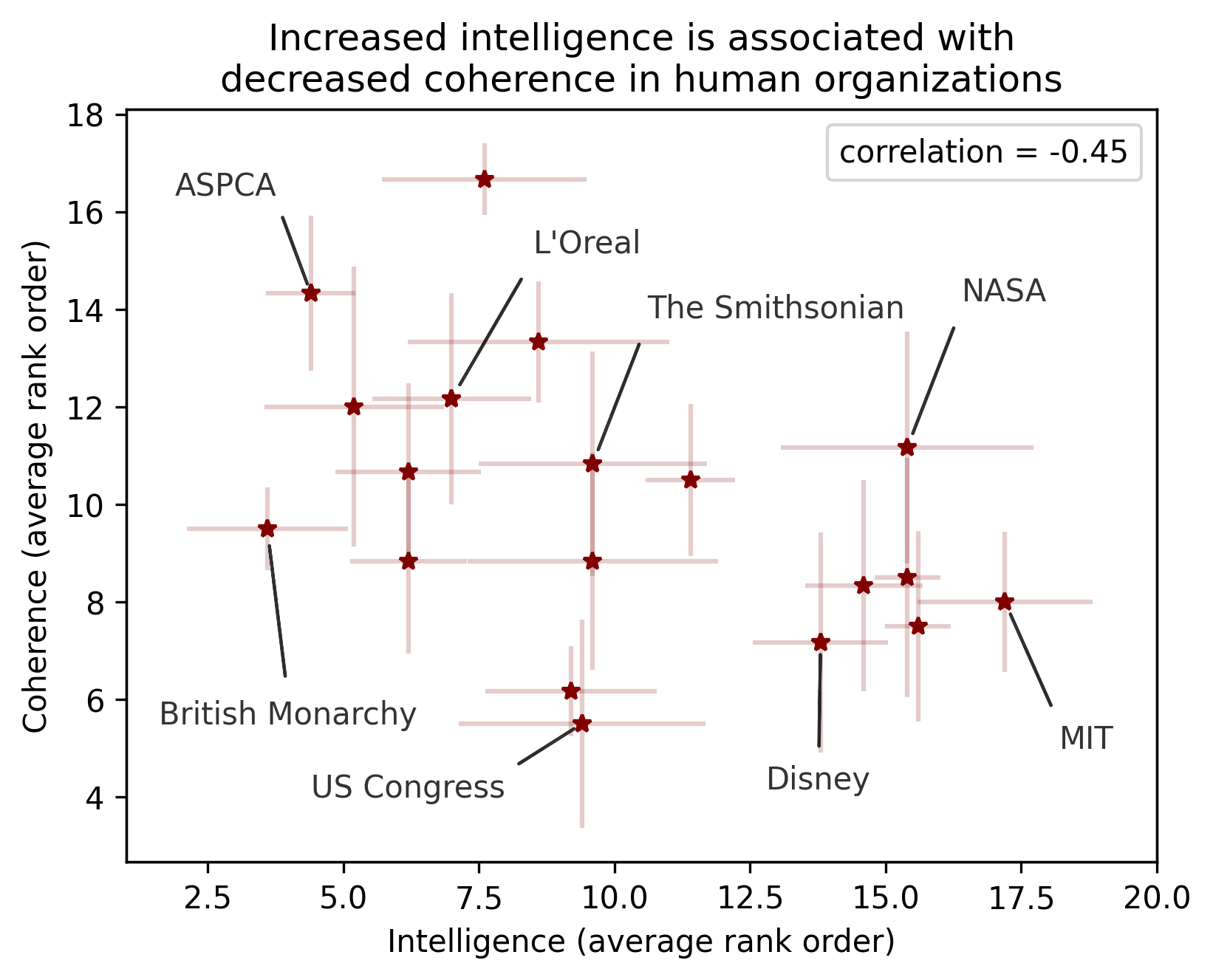

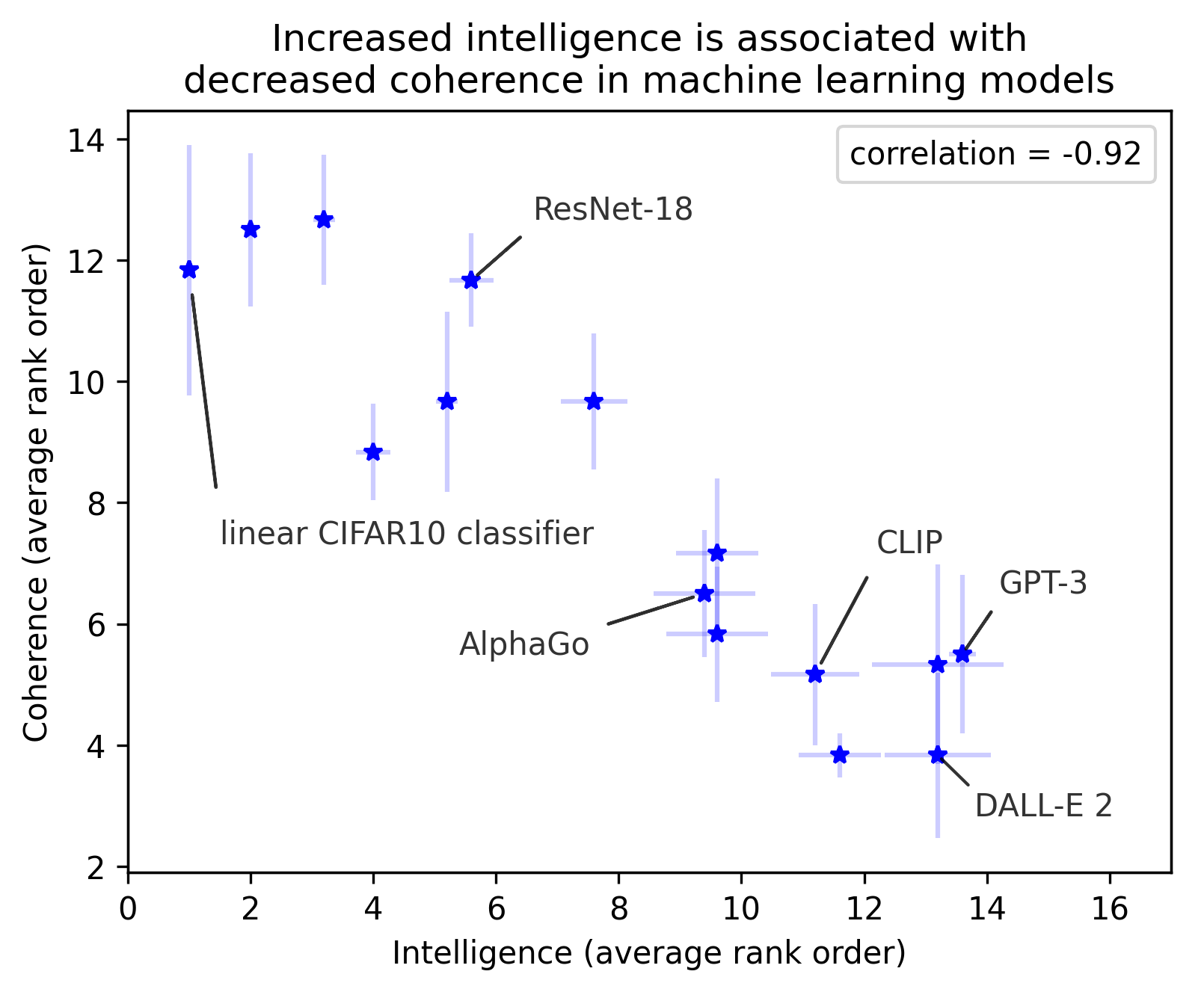

The Higher the Intelligence, the More Chaotic

Coherence of Neural Networks

Prospects and Challenges

- Efficiency, openness, origin, effectiveness, synthesis

- Retrieval-based (search-based) natural language processing

- “Last Mile” of Large Language Models

- Understanding the network structure

- Maintenance and efficient updates

- Disadvantages

  - Long passages

  - Long logical reasoning (chain-of-thought reasoning) 👉 Reinforcement learning?

  - Contamination of the natural-language corpus space
