Fundamental Models and Applications

Xin Binjian | 2023-06-02

Overview

(“Fundamental Model”, “Human Brain”) ∈ “General Intelligence”

- Neural network models embody intelligence different from humans.

- “You can’t cut butter with a knife made of butter.”

- “What is intelligence?” –> “What can intelligence do?”

Understanding is the Premise of Application

- ✅ Intelligence

- Intelligence itself: The choice of network architecture may be accidental.

- Neural network models: Structure conforms to physical reality.

- ❎ Safety

- Regulations, testing, licensing.

- ❎ Alignment

- General intelligence -> Human-like intelligence.

Invention or Discovery?

❎ Constructing artificial intelligence using neural networks.

✅ Discovering the phenomena and laws of intelligence through neural networks.

Given a large, deep model, a large amount of data in a given field (image recognition, natural language understanding), the right optimization method, and sufficient computing resources, success is guaranteed.

- Ilya Sutskever

“Simple” ≠ “Easy”!

- Seemingly simple, slow to be accepted.

- Simple ideas/methods are not easily accepted.

- Transformer

- Relatively simple structure.

- High computational saturation.

- Dynamic message passing.

Engineering Significance

Fundamental Large Models are the Source of High Model Capabilities

- The costs of GPT-3 and GPT-4 are “eye-watering”!

- LLaMA

- 7.4 million A100 GPU-hours (roughly 850 GPU-years, about $15M).

- Fundamental models are large and efficient.

- Large models are necessary, but not sufficient.

- Large models have about 1 trillion connections (the brain has 100 trillion connections).

AGI Invention 👉 Electricity/Car/Atomic Bomb Invention

- The emergence of new technologies leads to social progress.

- Not everyone knows:

- Increasing the size of autoregressive models + expanding training 🔜 Artificial General Intelligence!!!

- The eve of a surging revolution.

AGI Cambrian Explosion

Natural selection R&D vs. Human engineering R&D

— Daniel Dennett

- Infrastructure.

- Search.

- Applications and software/hardware development methods.

How to Explain “Emergent Capabilities”?

- Emergent Capabilities

- Comprehension ability.

- Common sense.

- Reflecting on reductionism.

- The physics of artificial intelligence.

- Experimental discoveries, unpredictable by theory.

- Quantitative change to qualitative change.

Computer Model of Neural Networks

- Turing machine

- Software computer.

- Engineering -> von Neumann machine.

- Memory bottleneck of the von Neumann architecture.

- Autoregressive neural networks

- Different memory types, no von Neumann bottleneck.

- 👉 Different computing chips?

The Essence of Fundamental Models

- Mapping of all knowledge (existing) / reality / laws.

- Joint probability distribution.

- Language-constructed world model.

- Network structure reflects abstract attributes of reality.

- The use of question-answering (reasoning) is a form of information retrieval.

- Information length, compression ratio -> reasoning, memory.

- Interface is embedded! Reality mapped to a one-dimensional embedded sequence.

- Vector database (Pinecone): query, retrieval (prediction).
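The query/retrieval step of a vector database can be illustrated with a minimal sketch. It assumes embeddings are plain lists of floats and ranks stored items by cosine similarity; the names `cosine_similarity`, `top_k`, and the three-dimensional toy vectors are hypothetical and are not Pinecone's API.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def top_k(query, index, k=2):
    """Return the k (key, score) pairs whose vectors are closest to the query."""
    scored = [(key, cosine_similarity(query, vec)) for key, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]

# Hypothetical 3-dimensional embeddings; real ones come from an embedding model.
index = {
    "cat": [0.9, 0.1, 0.0],
    "dog": [0.8, 0.2, 0.1],
    "car": [0.0, 0.1, 0.9],
}
results = top_k([1.0, 0.0, 0.0], index, k=2)
```

A production system would replace the linear scan with an approximate nearest-neighbor index, but the interface (embed, store, query by similarity) stays the same.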

Multimodal Applications and Unified Embeddings

- Modalities: Language, image, video, audio.

- Embeddings

- At a higher level of abstraction.

- More efficient communication.

- More efficient training/retrieval.

- Encoding facts and common sense.

- No need for synchronized multimodal data; each modality can be trained separately, using images as the binding medium.

- Generative models (understanding, mastering probability distributions, the basis for assumptions/reasoning).

- The physics of information and intelligence.

Meta ImageBind

- Joint embedding learning across six modalities (image/video, text, audio, depth, thermal (infrared) imaging, IMU).

- Training data need not contain all six modalities at once.

- Training data samples can be collected asynchronously.

- Performance far exceeds single-modality methods (image-based traditional methods and deep learning models).

- Generate photos of a rainforest or a farmers market from audio.

- Segment images based on sound/text (screaming to locate pedestrians in blind spots).

Applications

- Reasoning

- Adapting to applications (prompt engineering).

- Changing programming paradigms.

- Application algorithm development.

- Adapting network models

- SFT local modification and update.

- Additional networks.

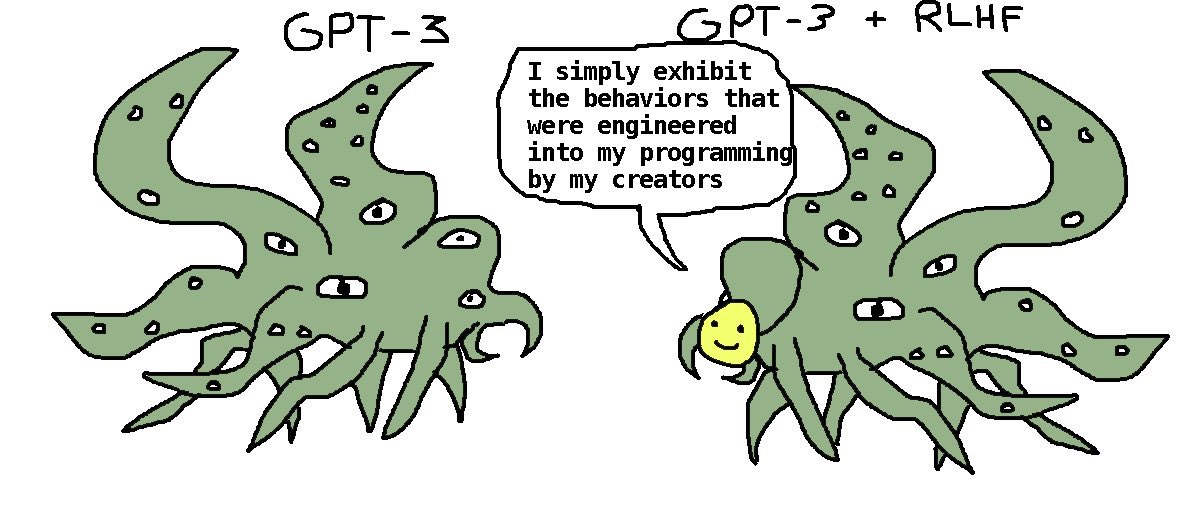

- RLHF (Reward Model & PPO)

- 👉 DPO (Direct Preference Optimization).

- Open source communities.
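As a rough illustration of why DPO can replace the reward model and PPO stage of RLHF, the sketch below computes the DPO loss for one preference pair. It assumes the inputs are summed sequence log-probabilities from the trained policy and from a frozen reference model; the function name, argument names, and the default `beta=0.1` are illustrative choices, not values from the slides.

```python
import math

def dpo_loss(logp_chosen, logp_rejected, ref_logp_chosen, ref_logp_rejected, beta=0.1):
    """DPO loss for a single preference pair, given sequence log-probabilities."""
    # Implicit reward margin: how much more the policy prefers the chosen
    # answer over the rejected one, relative to the frozen reference model.
    margin = (logp_chosen - ref_logp_chosen) - (logp_rejected - ref_logp_rejected)
    # -log(sigmoid(beta * margin)) written as log(1 + exp(-beta * margin)).
    return math.log1p(math.exp(-beta * margin))

# Policy identical to the reference: no preference learned yet.
neutral = dpo_loss(-5.0, -5.0, -5.0, -5.0)
# Policy has shifted probability toward the chosen answer: lower loss.
improved = dpo_loss(-4.0, -6.0, -5.0, -5.0)
```

The loss depends only on log-probabilities, so training reduces to ordinary supervised gradient descent on preference pairs.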

Shoggoth Model of Fundamental Model and Assistant Model

Reasoning Applications

Technology Stack

- Web/App conversation interface.

- App/IDE plugins.

- Programmatic interfaces:

- Front-end:

- Standalone interface (Web: Flask, Streamlit), IDE, OA plugin interface, UI logic layer.

- Back-end:

- API interface (OpenAI, OpenPilot, Google Bard), vector database interface, algorithm logic layer: CoT/ToT/Agent/prompt template library/retrieval.

Prompt Engineering

- Sampling of neural networks.

- Fundamental model as a decoder.

- Database query.

- Overcoming the context-length limits of Transformer models (8k to 32k tokens).

- Constructing external long-term memory interfaces (vector databases) and processing logic (LangChain).

- Programmatic data adaptation pattern: private data embedding, vector storage.

- Database connection (LlamaIndex), data query.

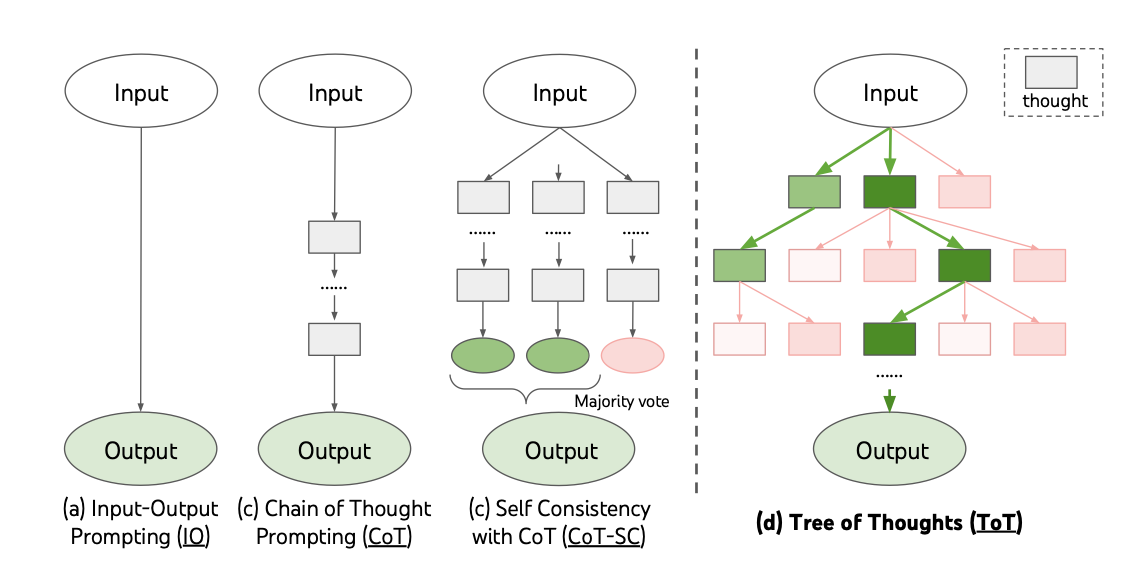
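The external-memory pattern above comes down to fitting the most relevant retrieved text into a limited context window. The sketch below assumes the chunks arrive already ranked by a retriever and uses a whitespace word count as a crude stand-in for a real tokenizer; `count_tokens`, `build_prompt`, and the prompt wording are hypothetical and are not LangChain or LlamaIndex calls.

```python
def count_tokens(text):
    """Crude stand-in for a tokenizer: counts whitespace-separated words."""
    return len(text.split())

def build_prompt(question, ranked_chunks, budget):
    """Pack the highest-ranked chunks into the prompt without exceeding the budget."""
    header = "Answer using only the context below.\n"
    footer = f"\nQuestion: {question}\nAnswer:"
    used = count_tokens(header) + count_tokens(footer)
    selected = []
    for chunk in ranked_chunks:      # assumed sorted, most relevant first
        cost = count_tokens(chunk)
        if used + cost > budget:
            continue                 # skip chunks that do not fit
        selected.append(chunk)
        used += cost
    return header + "\n".join(selected) + footer, used

chunks = [
    "LoRA adds low-rank matrices to frozen weights.",
    "A very long chunk " + "word " * 50,
    "QLoRA quantizes the base model.",
]
prompt, used = build_prompt("What is LoRA?", chunks, budget=30)
```

Skipping an oversized chunk rather than stopping lets smaller, lower-ranked chunks still use the remaining budget.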

Prompt Engineering Algorithms

- Input-Output (IO) prompting: plain conversational format.

- Chain-of-Thought (CoT).

- Tree-of-Thought (ToT) 👉 AlphaGo.
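The difference between a single chain and a tree of thoughts, and the link to AlphaGo-style search, can be sketched as a beam search over partial solutions. This is a toy under stated assumptions: `propose` and `score` stand in for the language-model calls that generate and evaluate thoughts, and the number-picking task is invented for illustration.

```python
def tree_of_thought(root, propose, score, depth, beam):
    """Breadth-first search over partial 'thoughts', keeping the best `beam` per level."""
    frontier = [root]
    for _ in range(depth):
        candidates = [child for state in frontier for child in propose(state)]
        if not candidates:
            break
        candidates.sort(key=score, reverse=True)
        frontier = candidates[:beam]
    return max(frontier, key=score)

# Toy stand-ins for the LLM calls: a "thought" appends one number to a partial sum.
NUMBERS = (1, 5, 6)
TARGET = 10

def propose(state):
    return [state + (n,) for n in NUMBERS]

def score(state):
    return -abs(TARGET - sum(state))

greedy = tree_of_thought((), propose, score, depth=2, beam=1)    # a single chain
searched = tree_of_thought((), propose, score, depth=2, beam=3)  # a small tree
```

With `beam=1` the search commits to the locally best first step and misses the target; keeping three branches alive lets it recover the exact solution.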

Autonomous Agents

- AutoGPT.

- BabyAGI.

- Programming paradigms worth paying attention to.
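The programming paradigm behind these agents is essentially a task queue driven by model calls. The sketch below assumes two hypothetical callables, `execute` and `plan`, which a real agent would implement as language-model requests; it is not the code of either project.

```python
from collections import deque

def run_agent(objective, execute, plan, max_steps=5):
    """Minimal task-queue loop: execute a task, then let the planner enqueue follow-ups."""
    tasks = deque([objective])
    log = []
    while tasks and len(log) < max_steps:
        task = tasks.popleft()
        result = execute(task)
        log.append((task, result))
        tasks.extend(plan(task, result))
    return log

# Hypothetical stand-ins; in a real agent both would call a language model.
def execute(task):
    return f"done: {task}"

def plan(task, result):
    # Split the top-level objective once; subtasks produce no further work.
    if task == "write report":
        return ["collect data", "draft text"]
    return []

log = run_agent("write report", execute, plan)
```

The `max_steps` cap matters in practice, because a planner that keeps generating tasks would otherwise loop indefinitely.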

Customization

Fine-tuning

Everyone should learn to fine-tune LLMs.

—Mark Tenenholtz

- GPT-4 is a frozen model.

- General purpose, but not optimal in specific domains.

- High overhead.

- Fundamental models are just decoders.

- Data is fundamental.

Customizing Fundamental Models Based on Retrieval

- Enhanced retrieval based on customized vector databases.

- End-to-end retrieval augmented fundamental models.

Training and Adaptation

- Training fundamental large models “from scratch” (GPT-4, LLaMA)

- Large datasets.

- OpenAI ~200 Engineers (Google 2000+).

- Thousands of GPUs, months.

- Supervised adaptation training/efficient parameter adaptation training (SFT/PEFT)

- Good small datasets.

- 1-100 GPUs.

- LoRA training 👉 QLoRA (2x4090, 24h@16bit).

- LLM-Adapters.

- Attention alternative algorithms

- FlashAttention, State Space, RNN.
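Why LoRA fits on a handful of GPUs can be seen from a parameter count. The sketch below assumes a single weight matrix W of shape d_out x d_in that stays frozen, with a trainable low-rank update B A scaled by alpha / rank; the 4096 x 4096 layer size and rank 8 are illustrative values, not figures from the slides.

```python
def lora_param_counts(d_in, d_out, rank):
    """Trainable parameters: full fine-tuning vs. a rank-`rank` LoRA adapter."""
    full = d_in * d_out
    lora = rank * (d_in + d_out)   # A is rank x d_in, B is d_out x rank
    return full, lora

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

def lora_forward(W, A, B, x, alpha, rank):
    """y = W x + (alpha / rank) * B (A x); W stays frozen, only A and B train."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    scale = alpha / rank
    return [b + scale * u for b, u in zip(base, update)]

full, lora = lora_param_counts(4096, 4096, rank=8)
y = lora_forward(W=[[1, 0], [0, 1]], A=[[1, 1]], B=[[1], [0]], x=[2, 3], alpha=2, rank=1)
```

For this layer the adapter trains 256 times fewer parameters than full fine-tuning, which is the saving that QLoRA then combines with a quantized base model.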

Distillation and Adaptation (High-quality Data Acquisition)

- Alpaca 7B

- GPT-3.5 (text-davinci-003) teaches LLaMA 7B.

- 175 seed tasks –> 52,000 generated instruction examples –> SFT, <$500.

- Vicuna 13B

- ChatGPT teaches LLaMA 13B.

- ~70,000 user-shared ChatGPT conversations, @ 8xA100, 24h, ~$300.

- Koala 13B

- ChatGPT & others teach LLaMA 13B.

- ~410,000 conversation samples, @ 8xA100, 2 epochs, 6h, <$100.

Infrastructure

- Cloud (training & inference)

- GCP, Azure, AWS, vector database API, search API.

- Mobile (inference).

- Software development

- Front-end: Web (Flask, Streamlit), application plugins Slack/WeChat/DingTalk.

- Large language model API interface (OpenAI, Bard).

- Local

- Server, local vector database.

- Programming paradigms.

Moats

- Whether to proceed?

- How to proceed?

- How to judge whether it is too early to proceed and whether the investment is too large?

- Application reality?

- How much profit?

- Is the technology available?

- Security?

- What is the feasible cost of improvement?

Table and Text Analysis:

- OA documents, DingTalk, WeChat Work.

- Mini-programs, App customer data (table, text) analysis, summary, query.

- Transaction behavior.

- User preferences.

Entertainment Systems (Natural Language Interface HMI, Systems, Software)

- Requirements document generation (systems, software): natural language application interface.

- Hardware system design.

- System architecture design.

- Software requires human work:

- Set up the development environment.

- Manual verification (web api flask, embedded).

- Programming paradigm changes.

Intelligent Driving Projects

- Segmentation: Occluded object analysis, completion.

- Target recognition: Automatic labeling of unknown targets.

- Single-image 3D information reasoning (NeRF + Google Street View large model).

- Prediction, planning.

VEOS

- Cloud model scaling.

- Multimodal grasp of application scenarios and driver styles.

Time Series Fundamental Models

- Methods: Chain/Tree of Thought, retrieval-based LLMs, local modification.

- Control (behavioral feedback) fundamental models (reinforcement learning).

- Active inference.

- Embodied AI

- The key to application and research.

- Time series embedding, richer world model.

- General intelligence distills objective physical laws.

- Using embedded knowledge and laws to understand causality and spacetime.

Conclusion

- No algorithmic moat.

- Computing resource limitations are almost negligible.

- Data collection and collation.

- Operations, products, development integration.

Accept the new reality.